TELANGANA STATE GOVERNMENT TRUSTS DATAEVOLVE FOR THEIR CLOUD

TELANGANA STATE E-OFFICE APPLICATION ON AWS

What is e-Office?

e-Office aims to support governance by ushering in more effective and transparent inter- and intra-government processes. The vision of e-Office is to achieve simplified, responsive, effective and transparent working in all government offices. The open architecture on which e-Office is built makes it a reusable framework and a standard product that can be replicated across governments at the Central, State and District levels. The product brings independent functions and systems together under a single framework.

Benefits of e-Office:

- Enhance transparency: files can be tracked, and their status is known to all at all times.

- Increase accountability: responsibility for the quality and speed of decision-making is easier to monitor.

- Assure data security and data integrity.

- Provide a platform for re-inventing and re-engineering the Government.

- Promote innovation by releasing staff energy and time from unproductive procedures.

- Transform the Government work culture and ethics.

- Promote greater collaboration in the workplace and effective knowledge management.

BUSINESS CHALLENGES

- Decentralised, local data centre management in multiple countries.

- Significant effort spent on validating, reviewing and planning Capex for all fixed assets.

- Difficulty managing software and hardware licenses across multiple data centres in different countries.

- Each country had its own set of storage hardware; storage allocation was not optimised and no standard procedure was followed, which increased Capex.

- More staff were required to manage the storage devices.

- Scaling the environment to meet changing storage needs was difficult.

- More than 10 backup tools were in use, making backup and restore unmanageable as data grew, with long backup windows and slow recovery times.

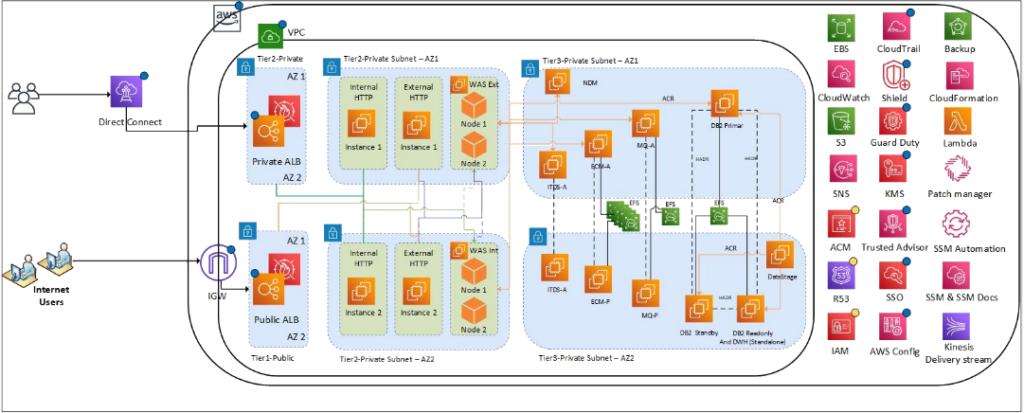

SOLUTION PROPOSED

- Migrate to AWS and use standardised tools and software across all countries. This made centralised service delivery possible, which reduced staffing costs and ensured overall compliance with security, monitoring and other requirements.

- Host the databases on EC2 instances with EBS volumes attached as required, and scale the volumes by monitoring CloudWatch and Splunk metrics.

- Build secure and robust backup and restoration solutions by combining S3/S3-IA with tools such as Commvault and N2WS.

- Automate the deletion of unattached volumes using custom scripts and AWS Lambda functions written with boto3, reducing manual work and the cost of unused volumes.

- Fully automate backup management using custom scripts, Commvault, N2WS and snapshots stored in S3.

- Provide a highly secure solution using appropriate access policies and data encryption for AWS storage services.

- Build custom solutions to overcome incompatibilities between different storage vendors and the AWS platform.

- Automate data movement between Availability Zones (AZs) for redundancy of business-critical systems.

- Provide separate environments for development, testing and production.

- Provision the entire infrastructure using CI/CD pipelines built with Jenkins and GitHub.

BUSINESS BENEFITS

- A location-independent Agile delivery model, recognized as a standard of excellence in software development. Centralized management of the IT infrastructure hosted on the AWS Cloud reduced Opex by 50%.

- Consolidating more than 10 backup tools into a single backup tool put a reliable, proven DR solution in place within the AWS Cloud.

- More than 70 storage devices from multiple storage vendors were decommissioned by moving to AWS managed storage services.

- Storage in the AWS Cloud scales fully on demand using services such as EBS and S3.

- Telangana E-Office now has a future-proof, cloud-based digital core that fully integrates the organisation's global IT operations, uses centralized global management and monitoring, standardizes and automates processes, and includes an enhanced security framework with intrusion detection and prevention systems. Increased scalability and agility let Telangana E-Office roll out new services and consistently provide seamless client experiences.

- More than 600 IT applications were migrated globally from legacy data centres to the AWS Cloud in less than 12 months, making this one of the most ambitious and fastest AWS cloud migrations and a new benchmark for digital transformation at this scale.

HOW CLOUD TRANSFORMATION IS HELPING TELANGANA E-OFFICE GROW

- Telangana E-Office is able to support business needs much faster than before.

- Business needs are fulfilled in far less time than the weeks or months required in the data centre world.

- Hosting the IT infrastructure in the cloud is cost-effective and makes it easy to run many proofs of concept (POCs) and finalize solutions, because it removes the dependency on hardware and software procurement found in traditional data centres.

- The cloud infrastructure laid the foundation for Telangana E-Office's data transformation and application modernization program. Data monetization and the building of microservices were made possible by this cloud migration program.

STORAGE SERVICES USED

Primary Storage

Telangana E-Office has achieved better IOPS and throughput for its applications by using EBS and S3 as primary storage.

EBS

- EBS is the main storage, holding around 60 terabytes.

- Database instances run PostgreSQL on EC2 instances, with EBS volumes attached as required.

- Different EBS volume types (io1, gp2, st1, sc1) are used depending on the workload. The volume type is finalised by load testing the applications in the production environment and monitoring CloudWatch metrics such as volume status and burst balance; the EC2 instances and EBS volumes are then rightsized.

- Existing gp2 volumes are being switched to gp3 for better performance and cost optimisation: instead of enlarging a gp2 volume to gain IOPS, a gp3 volume can be provisioned with the required IOPS independently of its size.

- Data in the EBS volumes is secured in the following ways:

- Encryption at rest using AWS managed keys in AWS KMS.

- Encryption operations occur on the servers that host EC2 instances, ensuring the security of both data at rest and data in transit between an instance and its attached EBS storage.

- All snapshots of encrypted EBS volumes inherit the encryption.

- EC2 instances to which the EBS volumes are attached are also restricted with IAM role-based access.

- Automation raises an alert for unattached volumes, and custom AWS Lambda functions written with boto3 delete them: a volume may stay unattached for 7 days, after which it is deleted automatically and a snapshot of it is kept for 30 days.

- An audit tool built on Splunk raises an incident if a volume or snapshot is unencrypted.
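The 7-day grace period and 30-day snapshot retention for unattached volumes can be expressed as a small decision function. This is a minimal sketch, not the Lambda actually deployed: it assumes a hypothetical `UnattachedSince` tag that the alerting step writes when it first sees a volume in the `available` state (EBS does not record when a volume was detached), and it takes volume dictionaries shaped like boto3's `describe_volumes` output. The boto3 calls that would fetch the volumes and act on the plan (`describe_volumes`, `create_tags`, `create_snapshot`, `delete_volume`) are left out so the logic runs on its own.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional

# Hypothetical tag written by the alerting step on first sighting of an unattached volume.
UNATTACHED_TAG = "UnattachedSince"
GRACE_PERIOD = timedelta(days=7)         # a volume may stay unattached this long
SNAPSHOT_RETENTION = timedelta(days=30)  # the final snapshot is kept this long


@dataclass
class Action:
    volume_id: str
    kind: str  # "tag_and_alert", "alert" or "snapshot_and_delete"
    snapshot_expires: Optional[datetime] = None


def plan_actions(volumes: List[dict], now: datetime) -> List[Action]:
    """Decide what to do with each volume in a describe_volumes-style list."""
    actions = []
    for vol in volumes:
        if vol["State"] != "available":  # "available" means not attached to an instance
            continue
        tags = {t["Key"]: t["Value"] for t in vol.get("Tags", [])}
        since = tags.get(UNATTACHED_TAG)
        if since is None:
            # First sighting: record the timestamp and raise an alert.
            actions.append(Action(vol["VolumeId"], "tag_and_alert"))
        elif now - datetime.fromisoformat(since) >= GRACE_PERIOD:
            # Grace period over: take a snapshot, then delete the volume.
            actions.append(
                Action(vol["VolumeId"], "snapshot_and_delete", now + SNAPSHOT_RETENTION)
            )
        else:
            # Still within the grace period: keep alerting.
            actions.append(Action(vol["VolumeId"], "alert"))
    return actions


if __name__ == "__main__":
    now = datetime(2024, 5, 20, tzinfo=timezone.utc)
    volumes = [
        {"VolumeId": "vol-a", "State": "in-use"},
        {"VolumeId": "vol-b", "State": "available"},
        {"VolumeId": "vol-c", "State": "available",
         "Tags": [{"Key": UNATTACHED_TAG, "Value": "2024-05-18T00:00:00+00:00"}]},
        {"VolumeId": "vol-d", "State": "available",
         "Tags": [{"Key": UNATTACHED_TAG, "Value": "2024-05-10T00:00:00+00:00"}]},
    ]
    for action in plan_actions(volumes, now):
        print(action)
```

Keeping the decision separate from the AWS calls makes the retention rules easy to test; the 30-day snapshot expiry would still need its own cleanup job or lifecycle policy.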

S3 and S3-IA

- All backed-up data, including snapshots and transaction logs, is stored in S3.

- Infrequently restored data is placed in S3-IA.

- S3 holds around 1 petabyte of data. A central backup account hosts only S3 and S3-IA, and the data is stored in S3/S3-IA within the same region.

- The following best practices are followed for S3 and S3-IA:

- Encryption of data at rest using AWS KMS customer master keys (CMKs).

- Restrictive bucket policies are used for S3.

- S3 bucket (resource-level) policies restrict access to specific IAM users and roles.

- S3 bucket policies enforce HTTPS requests, which ensures encryption of data in transit.

- Alerts are configured to report unauthorized access to, or deletion of, S3 data.

- Only the root user can access the critical S3 buckets.

- The Splunk-based audit tool also raises an incident if an S3 bucket is open to the public.

- S3 versioning is enabled on selected buckets to recover objects from accidental deletion or overwrite.

- Commvault and N2WS handle the backup of snapshots to S3 or S3-IA.

- The Amazon-provided snapshot service is used for all types of RDS backup.
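The HTTPS-only and restricted-principal bucket policies listed above can be generated from code so that every backup bucket gets the same statements. The sketch below is one way to write them, not the policy actually in use; the bucket name and role ARN are hypothetical. `aws:SecureTransport` and `aws:PrincipalArn` are standard IAM condition keys. Deny statements only remove access, so the listed principals still need an Allow in their IAM policies, and an overly narrow list can lock administrators out of the bucket.

```python
import json
from typing import List


def build_bucket_policy(bucket: str, allowed_principal_arns: List[str]) -> dict:
    """Bucket policy that denies non-HTTPS requests and any principal not listed."""
    resources = [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"]
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Reject any request that did not arrive over TLS.
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resources,
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
            {
                # Reject every principal except the listed IAM users and roles.
                "Sid": "DenyUnlistedPrincipals",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": resources,
                "Condition": {"ArnNotLike": {"aws:PrincipalArn": allowed_principal_arns}},
            },
        ],
    }


if __name__ == "__main__":
    policy = build_bucket_policy(
        "example-backup-bucket",                                # hypothetical bucket
        ["arn:aws:iam::111122223333:role/ExampleBackupRole"],  # hypothetical role
    )
    print(json.dumps(policy, indent=2))
```

The resulting document could be applied with a boto3 S3 client via `put_bucket_policy(Bucket=..., Policy=json.dumps(policy))`.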


Backup/Restore

Workloads and data across Amazon EC2, EBS and RDS are backed up by Commvault, N2WS and the AWS Backup service. A single backup account stores the backup data of all accounts in S3 and S3 Standard-IA.

Backup:

- EBS snapshots or full EC2 instance backups are taken daily or weekly using Commvault, depending on application requirements.

- File system backups are taken daily and weekly.

- Database transaction log backups are taken every 1 to 4 hours, depending on the criticality of the database, using native agents (e.g., RMAN for Oracle).

- Amazon RDS automated snapshots are taken using the native RDS backup feature.

- Multi-volume backups are taken at the AMI level using Commvault IntelliSnap, which preserves the metadata of all the volumes during the backup.

Restoration:

- Transaction log backups up to the selected restore time can be restored from S3 and applied to the database.

- File-level and volume-level restoration are also supported.

- An automated pipeline is in place to restore a database.
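Restoring to a selected time follows the usual point-in-time pattern: start from the most recent full backup taken before the target, then apply every transaction log backup in order until one covers the target. The sketch below shows only that selection step, assuming each backup is described by a hypothetical `Backup` record with start and end timestamps. In practice RMAN or Commvault perform this bookkeeping themselves, and the last log is replayed only up to the target time.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Tuple


@dataclass(frozen=True)
class Backup:
    name: str
    start: datetime
    end: datetime  # point in time up to which this backup holds data


def restore_chain(fulls: List[Backup], logs: List[Backup],
                  target: datetime) -> Tuple[Backup, List[Backup]]:
    """Return the full backup and the ordered log backups needed to
    recover the database to `target`."""
    candidates = [f for f in fulls if f.end <= target]
    if not candidates:
        raise ValueError("no full backup completed before the target time")
    base = max(candidates, key=lambda f: f.end)  # most recent usable full backup
    chain: List[Backup] = []
    covered_until = base.end
    if covered_until >= target:
        return base, chain  # the full backup alone reaches the target
    for log in sorted(logs, key=lambda b: b.start):
        if log.end <= covered_until:
            continue  # already covered by the full backup or an earlier log
        if log.start > covered_until:
            raise ValueError(f"gap in the log chain before {log.name}")
        chain.append(log)
        covered_until = log.end
        if covered_until >= target:
            return base, chain
    raise ValueError("log backups do not reach the target time")
```

Raising on a gap rather than silently restoring to an earlier point makes a missing log backup visible before the restore starts.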

How secure is the backup data?

- An internally built audit tool in Splunk sends an alert in the following cases:

- A backup snapshot is unencrypted.

- A backed-up snapshot is not tagged according to the defined standard.

- A snapshot is older than 30 days.

- Snapshot backups are cleaned up automatically according to the retention policy.

- Alerts are configured for unauthorized access to, or deletion of, the S3 buckets where the backup data resides.
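The three snapshot checks above can be sketched in a few lines. This is not the Splunk audit tool itself: it assumes snapshot records shaped like boto3's `describe_snapshots` output (`SnapshotId`, `Encrypted`, `StartTime`, `Tags`), and the required tag keys are hypothetical placeholders for the tagging standard, which the document does not specify.

```python
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

REQUIRED_TAGS = {"Application", "Owner", "Environment"}  # hypothetical tagging standard
MAX_AGE = timedelta(days=30)


def audit_snapshots(snapshots: List[dict], now: datetime) -> List[Tuple[str, str]]:
    """Return (snapshot_id, finding) pairs for a describe_snapshots-style list."""
    findings = []
    for snap in snapshots:
        sid = snap["SnapshotId"]
        if not snap.get("Encrypted", False):
            findings.append((sid, "unencrypted"))
        tag_keys = {t["Key"] for t in snap.get("Tags", [])}
        missing = REQUIRED_TAGS - tag_keys
        if missing:
            findings.append((sid, "missing tags: " + ", ".join(sorted(missing))))
        # StartTime is timezone-aware in boto3 responses, so `now` must be too.
        if now - snap["StartTime"] > MAX_AGE:
            findings.append((sid, "older than 30 days"))
    return findings
```

Each finding would then be raised as an alert or incident by whatever system consumes the list.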

BCDR [Business Continuity/Disaster Recovery]:

- N2WS and Commvault are used for DR.

- The DR strategy for each application is based on its type and criticality:

Platinum applications [zero RTO and RPO]: fully automated DR with no outage and no data loss; business services are recovered to a different AZ in the same region.

Gold applications [1-4 hours RTO and 1-8 hours RPO]: recovery involves a few manual steps using the automation pipelines or DR replica tools, with acceptable data loss; business services are recovered to a different AZ in the same region.

Silver applications [RTO and RPO of 1 day]: a fully manual recovery from Commvault to a different AZ.

Bronze applications: protected only within their AZ, so no cross-AZ recovery is provided.

- DR is carried out through pipelines built with N2WS, Commvault, Jenkins and GitLab.

- Every application undergoes a DR test once a year.
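Choosing a tier for an application amounts to comparing its required RTO and RPO with each tier's worst case. The function below is a hypothetical selection rule, not the documented process: it uses the upper end of each range quoted above (4 and 8 hours for Gold, 1 day for Silver) and treats an application with no stated requirement as Bronze.

```python
from typing import Optional

# (tier, worst-case RTO hours, worst-case RPO hours), using the upper bound
# of each range in the tier descriptions.
TIERS = [
    ("Platinum", 0, 0),
    ("Gold", 4, 8),
    ("Silver", 24, 24),
]


def dr_tier(required_rto_h: Optional[float], required_rpo_h: Optional[float]) -> str:
    """Pick the least demanding tier whose worst case still meets the
    application's required RTO and RPO (hypothetical selection rule).
    None for both means the application has no DR requirement."""
    if required_rto_h is None and required_rpo_h is None:
        return "Bronze"  # no cross-AZ recovery
    rto = float("inf") if required_rto_h is None else required_rto_h
    rpo = float("inf") if required_rpo_h is None else required_rpo_h
    for name, max_rto, max_rpo in reversed(TIERS):  # Silver, then Gold, then Platinum
        if max_rto <= rto and max_rpo <= rpo:
            return name
    # Platinum (0, 0) satisfies any non-negative requirement, so only bad input gets here.
    raise ValueError("RTO and RPO requirements must be non-negative")
```

For example, an application that can tolerate 6 hours of downtime and 12 hours of data loss maps to Gold, while one that needs recovery within 2 hours falls through to Platinum.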

ARCHIVAL

- Database transaction logs, snapshots and any other backup data taken via Commvault/N2WS are stored in S3, and infrequently restored data is placed in S3 Standard-IA.

- S3 Standard-IA was chosen over S3 Glacier or S3 Standard for archiving because, in most cases, the live databases retain the historical information, so very little data is archived today. If the requirement arises in future, moving the data to S3 Glacier will be explored.
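One common way to place data in S3 Standard-IA is an S3 lifecycle rule rather than writing objects to Standard-IA directly. The sketch below assumes that approach, which the document does not confirm; it builds the `LifecycleConfiguration` structure accepted by boto3's `put_bucket_lifecycle_configuration`. The prefix is hypothetical, and the 30-day minimum reflects the S3 rule for transitions from Standard to Standard-IA.

```python
def lifecycle_to_standard_ia(prefix: str, days: int = 30) -> dict:
    """LifecycleConfiguration that moves objects under `prefix` from
    S3 Standard to S3 Standard-IA `days` days after creation."""
    if days < 30:
        # S3 requires objects to stay at least 30 days before moving to Standard-IA.
        raise ValueError("transitions to STANDARD_IA need at least 30 days")
    return {
        "Rules": [
            {
                "ID": "to-standard-ia-" + (prefix.strip("/") or "all"),
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                # A future move to S3 Glacier could append another entry to this
                # list, e.g. {"Days": 180, "StorageClass": "GLACIER"}.
                "Transitions": [{"Days": days, "StorageClass": "STANDARD_IA"}],
            }
        ]
    }
```

With a boto3 S3 client this would be applied as `s3.put_bucket_lifecycle_configuration(Bucket="example-backup-bucket", LifecycleConfiguration=lifecycle_to_standard_ia("translogs/"))`, where the bucket name is also hypothetical.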

Performance and Metrics monitoring:

- Splunk is used for monitoring and analytics of EBS, S3 and EC2.

- Data is collected by Splunk agents installed on the EC2 instances and through AWS APIs by enabling the Splunk Add-on for AWS.

- CloudWatch metrics are used to monitor EBS burst balance; this data is sent to Splunk and monitored to decide when to scale the volumes.

- Metrics such as CPU, disk and memory utilization are collected by Splunk agents installed on the EC2 instances.

- An internal audit tool built in Splunk monitors these metrics and triggers an alert once a threshold is reached, and the resulting incident is acted upon.
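A threshold alert on EBS burst balance can be sketched as below. It assumes datapoints from the CloudWatch `BurstBalance` metric (namespace `AWS/EBS`, reported as a percentage) have already been retrieved, for example through Splunk or CloudWatch `get_metric_statistics`. The 20% threshold and the linear time-to-exhaustion estimate are illustrative assumptions, not the thresholds used by the audit tool.

```python
from typing import Optional, Sequence


def minutes_to_exhaustion(samples: Sequence[float], period_minutes: float) -> Optional[float]:
    """Estimate minutes until BurstBalance reaches 0%, from the average drop
    per sampling period; None if the balance is not falling."""
    if len(samples) < 2:
        return None
    drop_per_period = (samples[0] - samples[-1]) / (len(samples) - 1)
    if drop_per_period <= 0:
        return None
    return samples[-1] / drop_per_period * period_minutes


def burst_balance_alert(samples: Sequence[float], period_minutes: float,
                        threshold: float = 20.0) -> Optional[str]:
    """Return an alert message if the latest BurstBalance (percent) is below
    `threshold`, otherwise None. Samples are ordered oldest first."""
    latest = samples[-1]
    if latest >= threshold:
        return None
    message = f"BurstBalance at {latest:.0f}%, below {threshold:.0f}%"
    eta = minutes_to_exhaustion(samples, period_minutes)
    if eta is not None:
        message += f"; about {eta:.0f} minutes until exhausted"
    return message
```

A volume that repeatedly trips this alert is a candidate for a larger gp2 volume, a gp3 volume with provisioned IOPS, or io1.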

Logging:

- CloudTrail logs and AWS service logs (WAF, Shield) are managed through CloudTrail.

- All logs are monitored in Splunk, and alerts are raised when thresholds are reached.

Cost Optimization:

- Most instances are reserved and a few are on demand; flexible reservations cover around 3 to 5 years.

- EC2 instances are rightsized based on utilization reports collected in Splunk.

- Splunk monitors EBS volume usage and analyses metrics to identify gp2 volumes suitable for migration to gp3, reducing costs while maintaining the same performance.

- S3 usage is reduced by deduplicating backup data with Commvault.

- No data is transferred across regions, and high-availability applications keep their data synchronous within the AZ.
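The arithmetic behind the gp2-to-gp3 analysis is simple enough to sketch. The constants are AWS's published gp2 and gp3 baselines (gp2: 3 IOPS per GiB within a 100 to 16,000 IOPS range, with volumes below a 3,000 IOPS baseline able to burst to 3,000; gp3: 3,000 IOPS included regardless of size); the function names are hypothetical and this is not the Splunk analysis itself. Throughput is left out: gp2 can reach 250 MiB/s on larger volumes while gp3 includes 125 MiB/s, so that dimension needs a separate check.

```python
GP2_IOPS_PER_GIB = 3        # gp2 baseline scales with volume size
GP2_MIN_IOPS = 100
GP2_MAX_IOPS = 16_000
GP3_INCLUDED_IOPS = 3_000   # gp3 baseline, independent of volume size


def gp2_baseline_iops(size_gib: int) -> int:
    """Baseline IOPS of a gp2 volume of the given size."""
    return max(GP2_MIN_IOPS, min(GP2_MAX_IOPS, GP2_IOPS_PER_GIB * size_gib))


def gp3_iops_to_match(size_gib: int) -> int:
    """IOPS to provision on gp3 so it never drops below the gp2 volume's level.

    gp2 volumes with a baseline under 3,000 IOPS can burst up to 3,000, which the
    included gp3 baseline already covers; larger volumes need their full gp2
    baseline provisioned as gp3 IOPS.
    """
    return max(GP3_INCLUDED_IOPS, gp2_baseline_iops(size_gib))


def extra_gp3_iops(size_gib: int) -> int:
    """IOPS above the included 3,000 that would have to be provisioned separately."""
    return gp3_iops_to_match(size_gib) - GP3_INCLUDED_IOPS
```

Volumes for which `extra_gp3_iops` is zero (roughly 1,000 GiB and below) get at least their gp2 performance from gp3's included baseline, which makes them the most straightforward migration candidates.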

LESSONS LEARNT

Application slowness caused by high database IOPS and throughput.

Performance was improved by continuously monitoring IOPS, throughput and burst balance depletion using CloudHealth and CloudWatch metrics. Reports are sent to Splunk, where an alert is triggered and addressed, which in turn improves performance.

This testing should be done to choose the EBS volume type before the application is deployed to production.

Measure the S3 data that needs to be backed up, and if it will be accessed infrequently, move it to S3 Standard-IA instead of keeping it in S3 Standard.

An enhanced feature called power management powers servers on only when they are needed: for example, a server is powered on only when a backup is scheduled and powered off otherwise.
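The power-management rule reduces to a check run on a schedule: is a backup window open, or about to open? The sketch assumes backup windows are known as start and end times and uses a hypothetical 15-minute boot lead; a scheduler would act on the result with EC2 `start_instances` or `stop_instances` calls, which are not shown. It illustrates the idea rather than the feature as implemented.

```python
from datetime import datetime, timedelta
from typing import Iterable, Tuple

BOOT_LEAD = timedelta(minutes=15)  # hypothetical time allowed for the server to boot


def should_be_powered_on(now: datetime,
                         backup_windows: Iterable[Tuple[datetime, datetime]],
                         lead: timedelta = BOOT_LEAD) -> bool:
    """True if `now` is inside a scheduled backup window, or within `lead`
    before one starts, so the server is up when the backup begins."""
    return any(start - lead <= now < end for start, end in backup_windows)
```

For a window from 22:00 to 02:00, the server would be started at about 21:45 and stopped once the window closes at 02:00.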
