AWS Storage Gateway Virtual Tape Library | BACKUP YOUR TAPES to the CLOUD

AWS Tape Gateway for Backing Up and Archiving On-Premises Data to Virtual Tapes in the Cloud

Magnetic tape has been around for nearly 70 years and is one of the longest-lasting storage media still in use today. The first tape, introduced by IBM in 1952, was 720 meters long and could store up to 1.4 megabytes of data 😲

Over the years, tapes have become smaller and denser, and storage formats have changed many times. Otherwise, tape has not evolved dramatically. Magnetic tapes still rely on the same underlying technology.

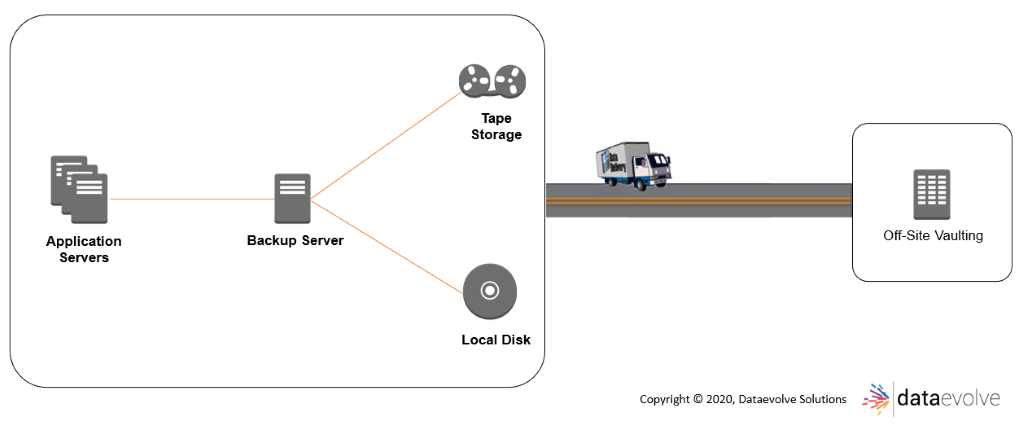

To this day, tape remains one of the biggest contenders for backup storage, but the technology has a few pitfalls. Heavy use deforms tapes, so they eventually have to be replaced, especially if you use them daily. Dirt, moisture, and the repeated handling involved in loading tapes into the drive, taking them out, and sending them off site can all make the data harder to read. The drive then has to be cleaned by manually inserting a cleaning tape. Dedicated staff are needed to manage the tape media, rotate the tapes, and reclaim unused capacity. Someone must constantly monitor tape backups and restart them if they fail. Offsite tape vaulting is an essential part of any disaster recovery strategy, but it requires people to move tapes between locations, and that process is prone to a small degree of failure.

In this cloud era, where bandwidth is no longer a bottleneck, we can finally reduce human involvement substantially by replacing physical tapes with virtual tapes. Not purchasing physical tapes saves cost and reduces inventory maintenance, and backups in the cloud are highly durable and stored cost-effectively.

With tape backups to the cloud, we get:

- No physical damage to tapes.

- Far less human intervention.

- Lower operational overhead.

- No need for reserved floor space in an off-site bunker.

- On-demand retrieval of tapes without any operational overhead.

- The list continues…

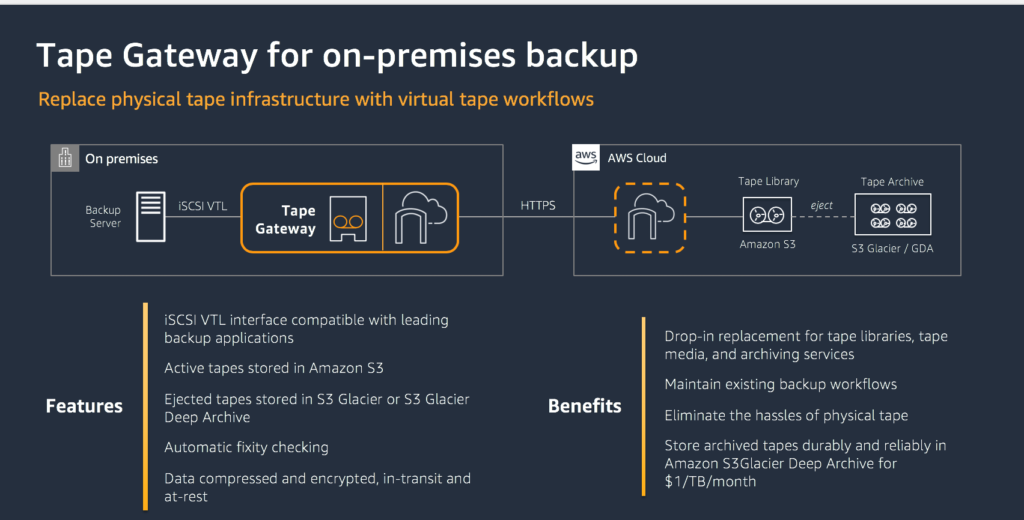

AWS Storage Gateway – Tape Backup (VTL) works seamlessly with most of the leading backup and replication software on the market. Tape Gateway integrates with the Amazon S3 Glacier and S3 Glacier Deep Archive storage classes, which provide secure and durable data retention. Retrieving a tape from the S3 Glacier storage class typically takes 3 to 5 hours, whereas retrieval from S3 Glacier Deep Archive takes up to 12 hours. If you are looking for long-term data retention and digital preservation where data is accessed once or twice a year, the Deep Archive storage class is the better fit and can reduce your monthly storage cost by up to 75 percent. Compared to offsite tape warehousing, AWS provides eleven nines of data durability, regular fixity checks, and data encryption.

Enough theory. Let's dive into the technical side. Ready?

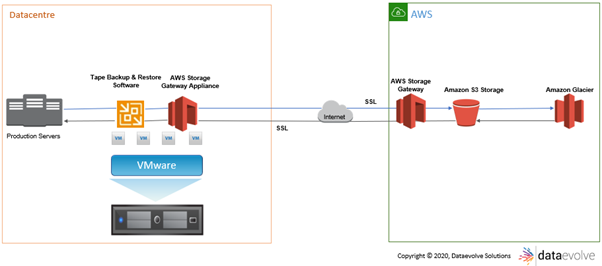

To create a Storage Gateway, you need a Storage Gateway appliance. The appliance can run on an Amazon EC2 instance or on an on-premises hypervisor such as VMware ESXi or Microsoft Hyper-V, with memory and CPU sized as recommended in the AWS documentation.

Planning

Proper planning is an important step in any successful implementation. Knowing the requirements and sizing recommendations before deployment saves a lot of time and avoids potential issues later. AWS has a deployment guide to assist in planning the gateway appliance. A gateway deployed in VTL mode needs two disks: one for an upload buffer and one for cache storage. These disks must be sized appropriately for the data that will be written to the gateway, because undersized disks are a common source of performance problems.

When planning for deployment, the first step will be to check the hardware and storage requirements needed to deploy. To deploy a VTL gateway you will need the following minimum resources:

- 4 vCPU

- 16GB of RAM

- 80GB of disk space for VM image and system disk

Buffer Disk

The upload buffer provides a staging area for the data before the gateway uploads the data to Amazon S3. Your gateway uploads this buffer data over an encrypted Secure Sockets Layer (SSL) connection to AWS.

Cache Disk

The cache storage acts as the on-premises durable store for data that is pending upload to Amazon S3 from the upload buffer. When your application performs I/O on a volume or tape, the gateway saves the data to the cache storage for low-latency access. When your application requests data from a volume or tape, the gateway first checks the cache storage for the data before downloading the data from AWS.

Buffer and Cache Disk Planning

Before deploying the appliance, carefully plan the upload buffer and cache disks, which are the lifeline of this solution.

In addition to the 80GB system disk, you will need to add two other disks to the gateway during deployment. These disks are used for the buffer and cache described above. The size of each disk is determined by a formula provided by Amazon. The disks have the following minimum and maximum sizes:

- Cache Disk – Minimum 150GB / Maximum 16TB

- Upload Buffer – Minimum 150GB / Maximum 2TB

It is important to note that these do not have to be single disks. You can add multiple disks to reach the maximum sizes.

CALCULATION OF THE UPLOAD BUFFER DISK

- Application Throughput = The rate at which your applications write data to your gateway.

- Network Throughput to AWS = The rate at which your gateway can upload data to AWS (Maximum upload rate to AWS gateway is 120MB/s)

- Compression Factor = The amount of compression used by the gateway. Two would be a safe number to use.

- Duration of Writes = The amount of time it takes to write data to the appliance. (For example: The amount of time it takes for the tape job to run)
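The inputs above can be combined into a sizing estimate. The sketch below is only illustrative: it assumes the formula given in the AWS Storage Gateway user guide (application throughput minus network throughput times compression factor, multiplied by the duration of writes), decimal units (1 GB = 1000 MB), and the 150GB minimum listed earlier. The function and parameter names are hypothetical.

```python
MIN_UPLOAD_BUFFER_GB = 150  # minimum upload buffer size listed in this post


def upload_buffer_gb(app_mb_s, network_mb_s, compression_factor, write_seconds):
    """Estimate the upload buffer size in GB.

    Assumed formula (from the AWS Storage Gateway user guide):
        (application throughput - network throughput * compression factor)
        * duration of writes
    """
    # Rate at which data piles up locally because it cannot be uploaded in time.
    backlog_mb_s = app_mb_s - network_mb_s * compression_factor
    # A negative backlog means the uplink keeps up; only the minimum applies.
    raw_gb = max(0.0, backlog_mb_s) * write_seconds / 1000  # assumes 1 GB = 1000 MB
    return max(MIN_UPLOAD_BUFFER_GB, raw_gb)


# Example: 40 MB/s written for 12 hours, 12 MB/s uplink, 2:1 compression.
estimate = upload_buffer_gb(40, 12, 2, 12 * 3600)
print(f"Upload buffer: {estimate:.1f} GB")
```

If the result exceeds the 2TB maximum, the write window or the uplink needs to be revisited rather than the disk simply being enlarged.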

CALCULATION OF THE CACHE DISK

The cache disk should be sized at 1.1 times the upload buffer. With the disk sizing worked out, we can move on to the installation.
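As a quick check of the 1.1× rule, here is a minimal sketch that derives the cache size from an upload buffer size and checks it against the 150GB minimum and 16TB maximum listed earlier. Treating 1 TB as 1024 GB is an assumption, and the function name is hypothetical.

```python
MIN_CACHE_GB = 150        # minimum cache disk size listed in this post
MAX_CACHE_GB = 16 * 1024  # 16 TB maximum, assuming 1 TB = 1024 GB


def cache_disk_gb(upload_buffer_gb):
    """Return the cache size in GB: 1.1 x the upload buffer, never below the minimum."""
    size = max(MIN_CACHE_GB, 1.1 * upload_buffer_gb)
    if size > MAX_CACHE_GB:
        raise ValueError(f"cache of {size:.0f} GB exceeds the {MAX_CACHE_GB} GB maximum")
    return size


# Example: a 691.2 GB upload buffer needs roughly a 760 GB cache.
print(f"Cache disk: {cache_disk_gb(691.2):.1f} GB")
```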

SECURING THE VTL GATEWAY

When deploying anything in the Neuland Labs data center, security is the utmost priority. The AWS Storage Gateway uses specific ports and communication paths depending on the deployment type.

The gateway requires access to the following endpoints to communicate with AWS. If you use a firewall or router to filter or limit network traffic, you must configure your firewall and router to allow these service endpoints for outbound communication to AWS.

anon-cp.storagegateway.region.amazonaws.com:443

client-cp.storagegateway.region.amazonaws.com:443

proxy-app.storagegateway.region.amazonaws.com:443

dp-1.storagegateway.region.amazonaws.com:443

storagegateway.region.amazonaws.com:443
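To verify firewall rules before activation, the endpoint list above can be generated for a given region and probed over TCP. This is a rough sketch: the helper names are hypothetical, `us-east-1` is only an example region, and a successful TCP connection on port 443 shows reachability, not that the gateway will activate.

```python
import socket

# Host prefixes taken from the endpoint list above.
ENDPOINT_PREFIXES = ["anon-cp", "client-cp", "proxy-app", "dp-1"]


def gateway_endpoints(region):
    """Return (host, port) pairs for the Storage Gateway endpoints in a region."""
    hosts = [f"{prefix}.storagegateway.{region}.amazonaws.com" for prefix in ENDPOINT_PREFIXES]
    hosts.append(f"storagegateway.{region}.amazonaws.com")
    return [(host, 443) for host in hosts]


def can_connect(host, port, timeout=5.0):
    """Return True if an outbound TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Usage (performs real network calls, so it is left commented out):
# for host, port in gateway_endpoints("us-east-1"):
#     print(host, "reachable" if can_connect(host, port) else "BLOCKED")
```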

AWS S3 DEEP ARCHIVE

S3 Glacier Deep Archive is Amazon S3’s lowest-cost storage class and supports long-term retention and digital preservation for data that may be accessed once or twice a year. It is designed for customers, particularly those in highly regulated industries such as financial services, healthcare, and the public sector, that retain data sets for 7-10 years or longer to meet regulatory compliance requirements. All objects stored in S3 Glacier Deep Archive are replicated and stored across at least three geographically dispersed Availability Zones, protected by 99.999999999% durability, and can be restored within 12 hours.

Key features of Deep Archive:

- Designed for durability of 99.999999999% of objects across multiple Availability Zones

- Lowest cost storage class designed for long-term retention of data that will be retained for 7-10 years

- Ideal alternative to magnetic tape libraries

- Retrieval time within 12 hours

- S3 PUT API for direct uploads to S3 Glacier Deep Archive, and S3 Lifecycle management for automatic migration of objects

One way to use S3 Glacier Deep Archive with the storage gateway is at tape creation: when you create tapes in the AWS console, you can choose to back them with either S3 Glacier or S3 Glacier Deep Archive.

The difference between the two, besides cost, is recoverability and the potential charges for early restores. Tapes in S3 Glacier are typically retrieved in 3 to 5 hours, while tapes in Deep Archive can be restored within 12 hours. Both classes carry retrieval charges and fees for removing data before the minimum storage duration, as they are meant for archival and retention.
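The same choice can be made programmatically. The sketch below assumes the boto3 `storagegateway` client and its `create_tapes` call, whose `PoolId` parameter accepts `GLACIER` or `DEEP_ARCHIVE`. The helper names, the gateway ARN, and the barcode prefix are hypothetical placeholders, and the size and count limits enforced by the service are not checked here.

```python
import uuid

GIB = 1024 ** 3
VALID_POOLS = ("GLACIER", "DEEP_ARCHIVE")


def build_create_tapes_request(gateway_arn, tape_size_gib, count, barcode_prefix,
                               pool_id="DEEP_ARCHIVE"):
    """Build the keyword arguments for the storagegateway create_tapes call."""
    if pool_id not in VALID_POOLS:
        raise ValueError(f"pool_id must be one of {VALID_POOLS}")
    return {
        "GatewayARN": gateway_arn,
        "TapeSizeInBytes": tape_size_gib * GIB,
        "ClientToken": str(uuid.uuid4()),  # idempotency token for the request
        "NumTapesToCreate": count,
        "TapeBarcodePrefix": barcode_prefix,
        "PoolId": pool_id,  # storage class the tape is archived to
    }


def create_tapes(request):
    """Send the request to AWS and return the new tape ARNs (needs boto3 and credentials)."""
    import boto3  # imported lazily so the builder above works without boto3 installed
    client = boto3.client("storagegateway")
    return client.create_tapes(**request)["TapeARNs"]


# Usage (placeholder ARN; requires a real, activated gateway):
# req = build_create_tapes_request(
#     "arn:aws:storagegateway:us-east-1:111122223333:gateway/sgw-EXAMPLE", 100, 2, "DA")
# print(create_tapes(req))
```

Keeping the request builder separate from the API call makes it easy to review which pool a batch of tapes will land in before anything is created.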

Key Takeaways:

- Sizing the upload buffer disk and cache disk correctly is key to a successful implementation.

- Backing up data to virtual tapes is an effective alternative to shipping physical tapes to a costly vault. With lower cost, better uptime, on-demand retrieval, and high availability, we feel this is an excellent solution for anyone looking to improve efficiency.

Thank you for reading. Has your organisation tried implementing this? What do you think the biggest obstacles will be? We at Dataevolve have expertise in various technologies and can help you with it. If you have anything to add, please send a response or add a note!

Happy Savings on your TCO 💰!
